GABRIEL

O'FLAHERTY-CHAN

Toronto-based iOS developer at Shopify, with roots in product design and UX. Currently making a procedurally generated universe sandbox and posting semi-frequent gif updates here.

Contact: hi@gabrieloc.com

Twitter: @_gabrieloc

Most Recent Project

"CHAOTIC ERA", an RTS game set inside a procedurally generated universe sandbox. One year in.

Exactly a year ago, I chose to once again try my hand at making a game, and over the course of the year, I’ve tweeted biweekly progress updates in gif format. Here are some of the highlights.

Im making a game pic.twitter.com/u4GWBviSke

— GABRIEL OFLAHERTY CHAN (@_GABRIELOC) October 18, 2017

On-Demand Everything

From the start, I knew I had to think optimization-first. The universe is kind of big, so I kicked things off by investigating how to only show small parts of it at a time. The first step towards this led to implementing an octree, which was great for very quick access to only visible parts of the universe, enabling selective instantiation of objects in the scene graph. I don’t think Unity would’ve been too happy about instantiating trillions of game objects.
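To make the octree idea concrete, here is a minimal sketch in Python rather than the project's Unity code. The names `Box`, `Octree`, `capacity` and `max_depth` are illustrative assumptions, not taken from the game. It shows the two operations the paragraph relies on: inserting points with subdivision on demand, and querying only the region that is currently visible.

```python
class Box:
    """Axis-aligned cube described by its centre and half its edge length."""

    def __init__(self, center, half):
        self.center = center
        self.half = half

    def contains(self, p):
        return all(abs(p[i] - self.center[i]) <= self.half for i in range(3))

    def intersects(self, other):
        return all(
            abs(self.center[i] - other.center[i]) <= self.half + other.half
            for i in range(3)
        )


class Octree:
    def __init__(self, bounds, capacity=4, depth=0, max_depth=16):
        self.bounds = bounds
        self.capacity = capacity    # points a node holds before it splits
        self.depth = depth
        self.max_depth = max_depth  # guard against endless splitting
        self.points = []
        self.children = None        # eight sub-octrees once subdivided

    def insert(self, p):
        if not self.bounds.contains(p):
            return False
        if self.children is None:
            if len(self.points) < self.capacity or self.depth >= self.max_depth:
                self.points.append(p)
                return True
            self._subdivide()
        return any(child.insert(p) for child in self.children)

    def _subdivide(self):
        cx, cy, cz = self.bounds.center
        h = self.bounds.half / 2
        self.children = [
            Octree(Box((cx + dx * h, cy + dy * h, cz + dz * h), h),
                   self.capacity, self.depth + 1, self.max_depth)
            for dx in (-1, 1) for dy in (-1, 1) for dz in (-1, 1)
        ]
        # Push the points this node was holding down into the new children.
        for q in self.points:
            any(child.insert(q) for child in self.children)
        self.points = []

    def query(self, region):
        """Return every stored point inside `region`, skipping octants that miss it."""
        if not self.bounds.intersects(region):
            return []
        found = [p for p in self.points if region.contains(p)]
        if self.children is not None:
            for child in self.children:
                found.extend(child.query(region))
        return found
```

Only the points returned by `query` would need scene objects; everything in octants that do not intersect the visible region is never touched.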

selective subdivision in the octree, will be necessary for varying levels of detail (lots of space in space) #gamedev pic.twitter.com/x1Lhr689B3

— GABRIEL OFLAHERTY CHAN (@_GABRIELOC) October 23, 2017

This ended up lending itself really well to how the layer backing the scene graph was modelled as well. For example, if only 1 galaxy is going to be visible at a given moment, we shouldn’t also need to create the trillions of other galaxies that would also potentially exist, or the millions of stars in each of those galaxies.

out of necessity, I'm back on optimization problems in an effort to put stars in the sky.

— GABRIEL OFLAHERTY CHAN (@_GABRIELOC) July 6, 2018

this means on demand loading of super thin slivers of the universe graph now

hopefully non-debug visuals soon#gamedev pic.twitter.com/xlLisrNx1s

Where this fell apart was the assumption that everything was physically static. Because the universe is constantly expanding and its bodies are in perpetual motion, assuming that a static box could contain each body was not going to be an option.

some more debug visuals: planet with orbiting moons -> neighbouring planets -> nearby stars #gamedev pic.twitter.com/mLr9NLkFIO

— GABRIEL OFLAHERTY CHAN (@_GABRIELOC) August 28, 2018

The next iteration removed binary partitioning altogether, in favour of the gravitational relationships between parent and child bodies. For example, just as Earth is a parent of its one moon, it’s also a child of the sun, which is (ok, was) a child of a larger cluster of stars. Each body defines a “reach”, which encapsulates the farthest orbit of all its children. Given a “visibility distance” (i.e. the max distance at which objects can be viewed from the game’s camera), visible bodies are determined by the intersection of two spheres: one located at the body’s center with a radius of its reach, and one at the camera’s center with a radius of its visibility distance.

This design enabled parent and child bodies and their siblings to be created at any point, and released from memory when no longer visible.
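The sphere test described above can be sketched in a few lines of Python. The `Body` class and its field names are hypothetical, and "reach" is taken here to be simply the largest orbit radius among a body's direct children, which is one reading of the description. Two spheres intersect when the distance between their centres is no more than the sum of their radii.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Body:
    name: str
    center: tuple                                          # position in a shared frame
    child_orbit_radii: list = field(default_factory=list)  # hypothetical field

    @property
    def reach(self):
        # Farthest orbit of any direct child; 0 for a body without children.
        return max(self.child_orbit_radii, default=0.0)


def spheres_intersect(center_a, radius_a, center_b, radius_b):
    return math.dist(center_a, center_b) <= radius_a + radius_b


def visible_bodies(bodies, camera_center, visibility_distance):
    """Bodies whose reach sphere overlaps the camera's visibility sphere."""
    return [
        b for b in bodies
        if spheres_intersect(b.center, b.reach, camera_center, visibility_distance)
    ]
```

A body that fails this test, along with everything orbiting it, can be left uncreated or released from memory.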

7 Digits of Precision Will Only Go so Far

While exploring the on-demand loading architecture, I quickly ran into an issue: objects in my scene would jump around or just stop showing up altogether. When I opened up the attributes inspector panel in the editor, I started seeing this everywhere:

Because Vector3 uses the float type (which is only precise to about 7 significant digits), any object in the scene with a Vector3 position containing a dimension approaching 1e+6 started to behave erratically as it lost precision.
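The effect is easy to reproduce outside Unity. The following Python sketch (helper names are made up for illustration) round-trips values through a 32-bit float using the standard `struct` module and measures the gap between neighbouring representable values at different magnitudes.

```python
import struct


def to_float32(value):
    """Round a Python float (a 64-bit double) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", value))[0]


def float32_spacing(value):
    """Gap between a positive `value` and the next larger 32-bit float."""
    bits = struct.unpack("<I", struct.pack("<f", value))[0]
    next_up = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return next_up - to_float32(value)


print(float32_spacing(1.0))          # about 1.19e-07
print(float32_spacing(1_000_000.0))  # 0.0625
print(float32_spacing(10_000_000.0)) # 1.0
print(to_float32(1_000_000.03))      # 1000000.0, the 0.03 is lost
```

Near 1e+6 a position can only move in steps of 0.0625 units, and near 1e+7 in whole units, which is consistent with objects jumping around far from the origin.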

Visualizing floating point precision loss, or "why #gamedev stuff behaves badly far from the origin"

— 🏳️🌈Douglas🏳️🌈 (@D_M_Gregory) September 23, 2018

The blue dot is end of the desired vector. The black dot snaps to the closest representable point (in a simplified floating point grid with only 4 mantissa bits) pic.twitter.com/o1HKYByIbS

Since I was using Vector3 for both data modelling and positions of objects in the scene (no way around that), my kneejerk reaction was to start by defining my own double-backed vector type which would at least double the numbers I could use for modelling. This got me a bit further but still wasn’t addressing how those values would be represented in the scene. At this point I was rendering the entire universe in an absolute scale where 1 Unity unit equaled a certain number of kilometers, resulting in values way beyond 16 digits of precision. Fiddling with the kilometer ratio wasn’t going to solve this problem, as objects were always way too small or way too far away.

// In model land...

body.absolutePosition = body.parent.absolutePosition + body.relativePosition;

// In scene land...

bodyObject.transform.position = body.absolutePosition * kmRatio;

One solution was to instead use a “contextual” coordinate system. Because the gameplay oriented around one body at a time, I could simply derive all other body positions relative to the contextual body. In other terms, the contextual body would always sit at (0,0,0), and all other visible bodies would be relatively nearby. And because the camera would always be located near the contextual body (focusing on one body at a time), as long as my visible distance was well within the 7 digit limit of float, I could safely convert all double vectors into Vector3s, or even scrap use of double in this context entirely.

var ctxPos = contextualBody.absolutePosition;

var bodPos = body.absolutePosition;

bodyObject.transform.position = ctxPos - bodPos;

// Huge loss of precision

This ended up working until it didn’t, which was very soon. Here’s a fun little phenomenon:

(1e+16 + 2) == (1e+16 + 3)

// false

(1e+17 + 2) == (1e+17 + 3)

// true

Despite using this “contextual position” for scene objects, the actual values being calculated were still being truncated pretty badly even before being turned into Vector3s, whenever the contextual position was a large enough value (i.e. containing a dimension approaching or exceeding 1e+17). My kneejerk reaction was to once again supersize my custom vectors and turn all those doubles into decimals to get even more precision (~29 digits). This felt extremely inelegant and lazy.
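The comparison above falls out of how far apart neighbouring doubles are at each magnitude. This short Python check (Python floats are 64-bit doubles; `math.ulp` needs Python 3.9 or later, and `offsets_collide` is a helper invented for this example) makes the spacing explicit.

```python
import math
from decimal import Decimal


def offsets_collide(base, a, b):
    """True when base + a and base + b round to the same stored value."""
    return (base + a) == (base + b)


print(math.ulp(1e16))  # 2.0  -> neighbouring doubles are 2 apart
print(math.ulp(1e17))  # 16.0 -> neighbouring doubles are 16 apart

print(offsets_collide(1e16, 2, 3))  # False
print(offsets_collide(1e17, 2, 3))  # True

# A decimal type with more significant digits still tells the two apart.
print(offsets_collide(Decimal(10) ** 17, 2, 3))  # False
```

At 1e+17 both `+ 2` and `+ 3` are smaller than half the gap to the next double, so they round back to the same value. More digits push the problem further out but do not remove it.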

With the goal of making all position values as small as possible, I decided to just scrap the universe-space absolutePosition design altogether, in favour of something a little more clever and cost-effective.

One way to avoid absolutePosition in contextual position calculation was to rely instead on relational information, such as the position of a body relative to its parent. Since absolutePosition could be derived by crawling up the relation graph and summing relative positions, the same offset could be calculated by instead finding the lowest common ancestor of both the contextual body and the given body, and calculating their positions relative to it. This keeps the numbers involved significantly smaller.

var lca = lowestCommonAncestor(contextualBody, body);

var ctxPos = contextualBody.relativePosition(relativeTo: lca);

var bodPos = body.relativePosition(relativeTo: lca);

// if body == contextualBody, this is (0,0,0)

bodyObject.transform.position = ctxPos - bodPos;

The result? Values well below that 7 digit ceiling! 🎉
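For readers who want to try the lowest-common-ancestor approach, here is a self-contained Python sketch. The `Body` class, its `offset` field and the function names are assumptions made for illustration; the subtraction order follows the snippet above. Offsets are summed only up to the shared ancestor, so the huge galaxy-scale terms never enter the arithmetic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(eq=False)
class Body:
    name: str
    offset: tuple = (0.0, 0.0, 0.0)  # position relative to the parent
    parent: Optional["Body"] = None

    def ancestors(self):
        """This body, then its parent, grandparent, ... up to the root."""
        node = self
        while node is not None:
            yield node
            node = node.parent

    def position_relative_to(self, ancestor):
        """Sum parent-relative offsets from this body up to `ancestor`."""
        total = (0.0, 0.0, 0.0)
        for node in self.ancestors():
            if node is ancestor:
                return total
            total = tuple(t + o for t, o in zip(total, node.offset))
        raise ValueError(f"{ancestor.name} is not an ancestor of {self.name}")


def lowest_common_ancestor(a, b):
    seen = {id(node) for node in a.ancestors()}
    for node in b.ancestors():
        if id(node) in seen:
            return node
    raise ValueError("bodies do not share a root")


def contextual_position(contextual_body, body):
    lca = lowest_common_ancestor(contextual_body, body)
    ctx_pos = contextual_body.position_relative_to(lca)
    bod_pos = body.position_relative_to(lca)
    # Same order as the snippet above; (0, 0, 0) when body is contextual_body.
    return tuple(c - b for c, b in zip(ctx_pos, bod_pos))
```

With a moon 384,400 units from its planet, the result stays exact even if the planet's galaxy sits 1e+20 units from the root, whereas subtracting two absolute positions at that magnitude can only produce multiples of 16,384.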

Visuals and Interaction

One of the biggest challenges of this project has been visuals, specifically choosing the right kind of UI element for interacting with unconventional types of information, such as small points in 3D space. Over time, this project has seen many iterations on how best to solve these problems:

a system for interacting with orbiting bodies using callouts #gamedev pic.twitter.com/uwUcph2z0B

— GABRIEL OFLAHERTY CHAN (@_GABRIELOC) September 21, 2018

looping back and forth between 3 levels. equally tedious and rewarding getting to this point #gamedev #fui pic.twitter.com/hDEMnsw6mx

— GABRIEL OFLAHERTY CHAN (@_GABRIELOC) February 10, 2018

Another challenge has been figuring out how to interact with the surface of a planet. Although this gameplay mechanic will likely be cut in favour of a coarser grain level of interaction, a big part of it was figuring out how to evenly distribute points on a sphere, and whether or not that should be done in 3D or in 2D:

this took an embarrassingly long time to figure out, but it actually works now: an infinitely subdivisable icosahedron-backed dual polyhedron made possible using a DCEL

— GABRIEL OFLAHERTY CHAN (@_GABRIELOC) January 10, 2018

now my life sim will have a place to play #gamedev #madewithunity pic.twitter.com/YkaMVtRYcg

improved accuracy of frustum culling by taking into account surface normals #gamedev pic.twitter.com/sBpRL7NRj4

— GABRIEL OFLAHERTY CHAN (@_GABRIELOC) March 10, 2018

small UI update: 3d navigation visualized with a 2d hex grid #gamedev #FUI pic.twitter.com/NmtdN9Im3M

— GABRIEL OFLAHERTY CHAN (@_GABRIELOC) February 18, 2018
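On the question of evenly distributing points on a sphere, one common and much simpler alternative to the subdivided icosahedron mentioned in the tweets is a Fibonacci lattice. The sketch below is that swapped-in technique, not the DCEL-backed approach used in the project, and it only produces points, without the face and neighbour information a dual polyhedron provides.

```python
import math


def fibonacci_sphere(count):
    """Return `count` roughly evenly spaced points on the unit sphere."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(count):
        z = 1.0 - (2.0 * i + 1.0) / count     # evenly spaced heights in (-1, 1)
        ring_radius = math.sqrt(1.0 - z * z)  # radius of the circle at height z
        theta = golden_angle * i              # rotate by the golden angle each step
        points.append((ring_radius * math.cos(theta),
                       ring_radius * math.sin(theta),
                       z))
    return points
```

The trade-off is that a lattice like this cannot be subdivided locally, which is where the icosahedron-based approach has the advantage.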

Navigating between a contextual body and its parent or children is an ongoing challenge, as it’s a constant battle between utility and simplicity, and needless complexity creeps up all the time. For example, the first iteration of navigation had a neat yet unnecessary mosaic of boxes around the top of the screen representing individual bodies that could be navigated to. I decided to dramatically simplify for a few reasons:

- The gameplay doesn’t necessitate travelling between multiple layers at a time (eg. moon -> galaxy)

- The hierarchical structure wasn’t being communicated very well with boxes stacking horizontally and vertically

- Individual boxes didn’t clearly describe the body they represented, and icons weren’t going to be enough.

visuals are a little rough but functionality is all there. here's navigation between different levels of detail based off new architecture.

— GABRIEL OFLAHERTY CHAN (@_GABRIELOC) May 15, 2018

tldr; old graph created top-down, that was too slow, now everything's calculated bidirectionally and on-demand#gamedev pic.twitter.com/Fn2Srd1lJt

The current gameplay now shows one body at a time in the navigation and visible bodies can be interacted with more contextually, via 2D UI following objects in 3D space.

simplified navigation make transitions clearer. should be able to go up and down layers soon #gamedev pic.twitter.com/hwXp14uFov

— GABRIEL OFLAHERTY CHAN (@_GABRIELOC) October 2, 2018

What’s next?

With the universe sandbox in a stable place, I’m hoping to shift all energy now towards gameplay and game mechanics. Because I’m hoping to implement a real-time strategy element, I need to think about how resources are mined, how territory is captured, how interaction with other players and AI works, and a lot more.

If you’re interested in learning more about this project, email me at hi@gabrieloc.com or contact me on twitter at @_gabrieloc.

Past Project

TV Plan - an AR app to help make TV purchase decisions

I recently made the decision to get a new TV, but couldn’t settle on which size to get. One Saturday morning I came up with the idea to use Apple’s new ARKit tech to help solve this problem, and shortly after TV Plan was born.

In Summer 2017, Apple made it official that they were getting serious about Augmented Reality. After seeing what the tech was capable of, I realized immediately this was something I wanted to get involved in. Since June, I’ve been learning and tinkering with ARKit to see what it’s capable of, but only recently has an obvious real-world use case come to mind.

An important opportunity I’ve identified has been for simple utilities which solve problems that couldn’t otherwise be addressed without AR. My problem was “will a 55 inch TV look too big in my living room?”.

A lesson learned from this project was the effectiveness of lighting and shadows. Adding a soft shadow behind the TV resulted in geometry looking way less like a superimposed 3D mesh slapped on top of my camera’s feed, and more like something which was actually “there”. Although subtle and not immediately obvious, I expect improvements in light estimation to be critical to the success of AR in the future.

Here’s an example of the TV with and without shadows:

As for next steps, I’ve been collecting feedback online and offline, and will be cycling quick wins into the app in the near future. For example, a common suggestion has been to add support for different TV sizes. Turns out my assumption that TVs max out around 75” was pretty naive 🤓.

Past Project

Making “Giovanni”, a Game Boy Emulator for the Apple Watch

Since getting an Apple Watch last fall, I’ve been disappointed by the lack of content. To help address this, I made my own game a few months ago (a 3D RPG), but obviously it still didn’t address the bigger issue. An idea I had was to port an existing catalog, and emulation made perfect sense.

The result is a surprisingly usable emulator which I’m calling Giovanni after the super-villain from my favourite Game Boy game, Pokemon Yellow. Ironically, I’ve only ever played the game on an emulator, as growing up I didn’t have access to the real deal. In a way, I feel this is my way of giving back to the community.

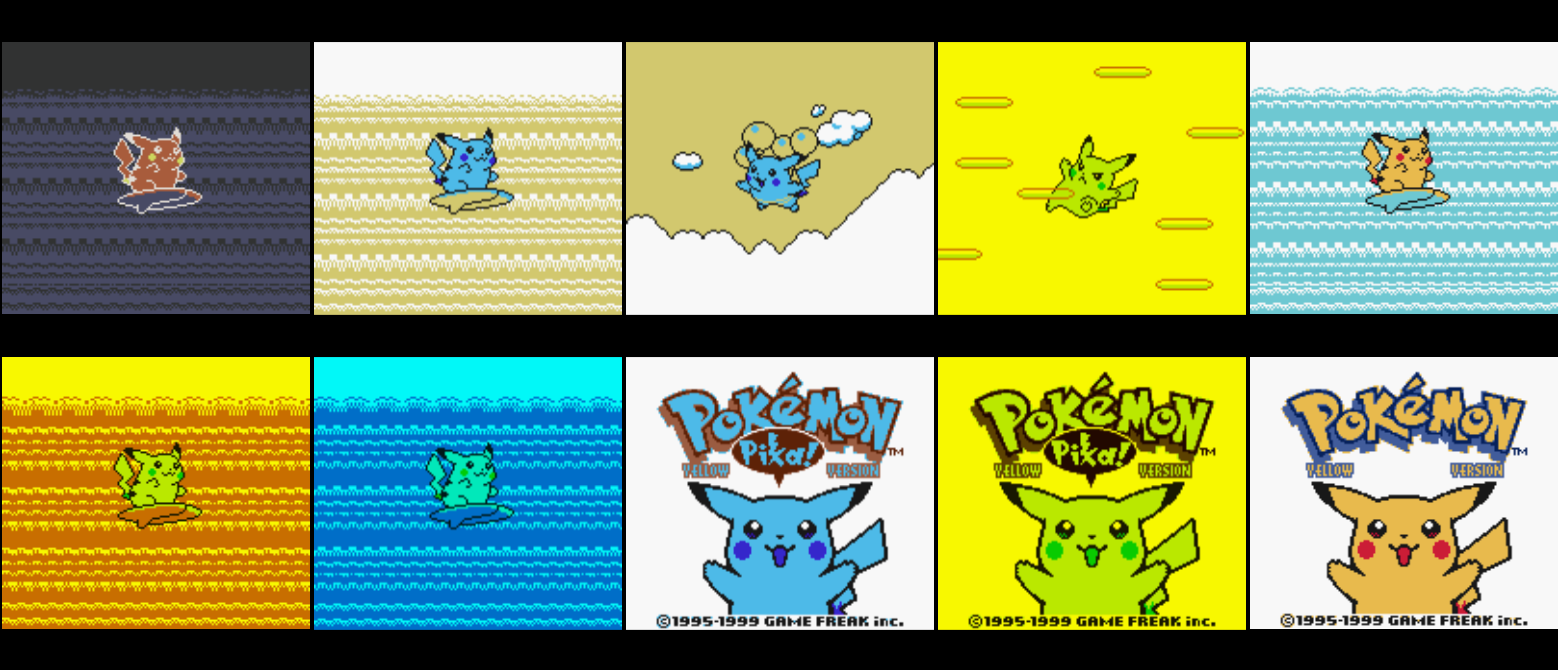

That feeling from accomplishing something you thought was too crazy to actually work is phenomenal. Here’s what Pokemon Yellow looks like on a Series 2 Apple watch:

One of the big challenges was to find the right balance between framerate and performance. As you can see, it’s a bit sluggish and unresponsive, but as a prototype, I think it answers the question of “is this possible”.

A Few Lessons Learned

For the sake of prototyping, I committed to doing as little unnecessary work as possible, so extending an existing emulator was my first approach. However as platform limitations and ubiquities surfaced, I discovered it was way less effort to just start from scratch and only pull in what I needed from open source projects.

My go-to emulator was Provenance, an open-source front-end for iOS. Having made contributions (albeit minor ones) in the past, I was semi-familiar with the codebase. My first approach was to have the watchOS extension ask iOS for a list of game names, then when a game was selected, download it and run it locally. The first issue was with WatchConnectivity and Realm. Because Realm requires queries to be made on the main thread, that meant I wouldn’t be able to communicate with iOS unless the app was running, which wouldn’t be an ideal user experience. After investigating querying Realm without WatchConnectivity and not having much luck, I realized Provenance offered a level of sophistication that wasn’t really necessary at this point.

Taking a step back, I realized Provenance (and others) are just thin layers on top of Gambatte, where all the actual emulation was being done. After cloning the repo and looking at example code, I realized Gambatte was already extremely high-level, offering support for loading ROMs, saving/loading, even GameShark.

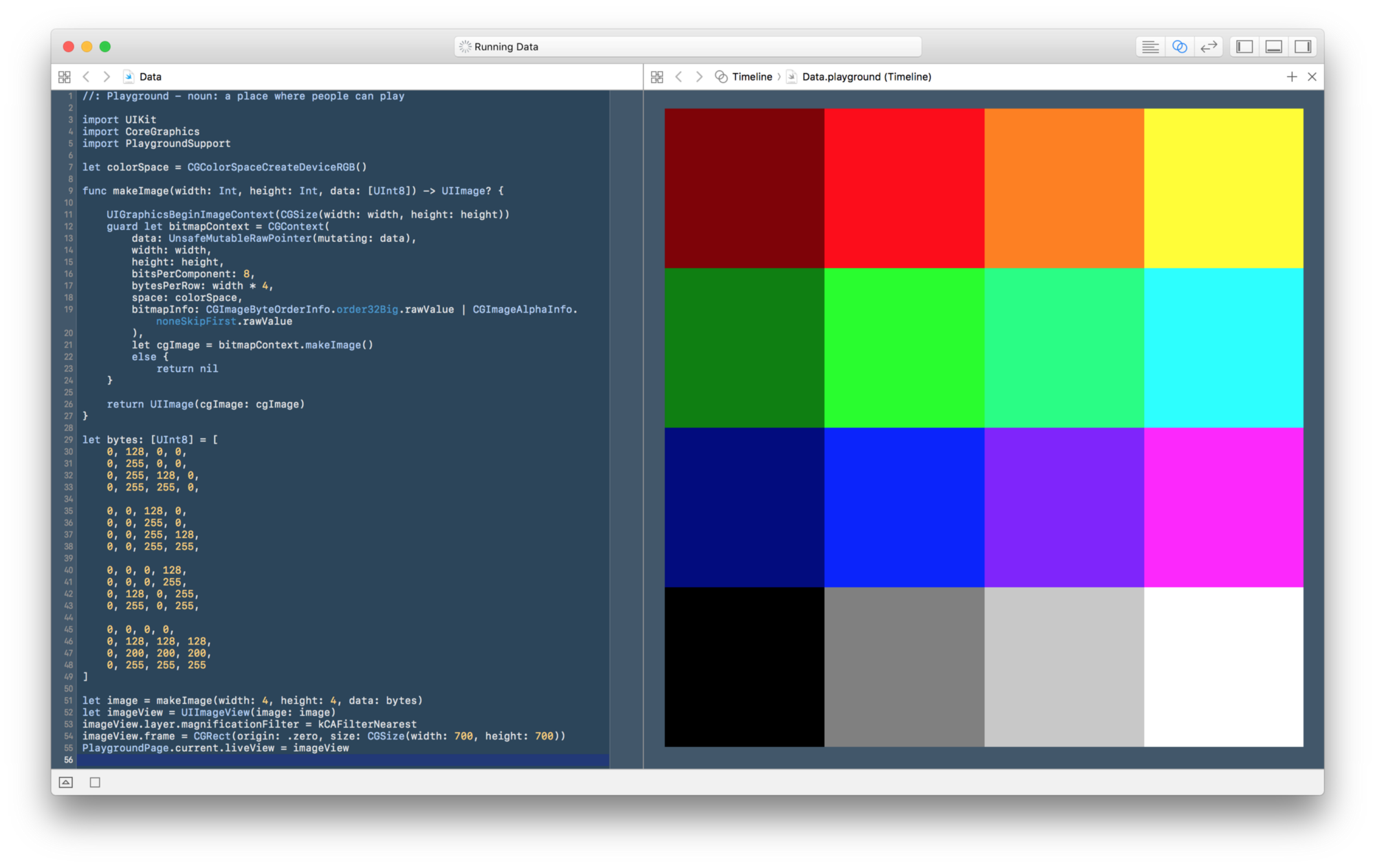

Graphics on watchOS

Oh yeah, up until this point I was working under the fatal assumption that watchOS supported OpenGL or Metal (because of SceneKit). As it turns out, it doesn’t. I imagine if I did this investigation beforehand, it probably would’ve deterred me from even beginning. Nonetheless, I was too far in.

Recalling a Hacker News post about how some guys from Facebook got Doom running on watchOS not too long before, I discovered they were rendering to a UIImage using Core Graphics. Looking at what Gambatte was filling its video buffer with revealed that it too was just outputting pixel data.

I realized I didn’t know enough about feeding pixel data into a Core Graphics Context, so I created a Swift Playground to play around with creating images from a pixel buffer. As someone who learns best through trial and error (“what does changing this thing do?”), the ability to get immediate visual feedback was amazing.

Immediately after this exercise, I was finally able to get something on-screen, but the colors were all wrong. By adjusting the byte order and composition options (and with a little more trial and error), I was able to get what finally looked right:
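The "colors were all wrong" symptom is typical of a channel-order mismatch, and a small Python sketch can show why. It assumes, purely for illustration, that each pixel is a 32-bit integer laid out as 0x00RRGGBB; that layout is an assumption here, not a statement about Gambatte's actual output or Core Graphics' API.

```python
import struct


def raw_little_endian_bytes(pixels):
    """Bytes as they sit in memory on a little-endian CPU: B, G, R, X per pixel."""
    return struct.pack("<%dI" % len(pixels), *pixels)


def to_rgba_bytes(pixels):
    """Reorder each assumed 0x00RRGGBB pixel into R, G, B, A bytes (opaque alpha)."""
    out = bytearray()
    for p in pixels:
        out += bytes(((p >> 16) & 0xFF, (p >> 8) & 0xFF, p & 0xFF, 0xFF))
    return bytes(out)


orange = 0x00FF8000                             # R=255, G=128, B=0
print(list(raw_little_endian_bytes([orange])))  # [0, 128, 255, 0]
print(list(to_rgba_bytes([orange])))            # [255, 128, 0, 255]
```

If the raw bytes are read as R, G, B, A, orange turns into a transparent blue. Either the bytes get reordered as above, or the image is created with a byte order and alpha setting that matches the buffer.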

For input, making a button on screen for every single input wasn’t desirable, so I took advantage of gestures and the Digital Crown. By allowing the user to pan on screen for directions, rotate the Digital Crown for up and down, and tap the screen for A, I was able to eliminate buttons until I was left with Select, Start, and B.

Touching the screen for movement isn’t a great interaction, but being able to use the Crown worked out a lot better than originally anticipated. Scrolling through a list of options is basically what the Crown was made for, and if the framerate was even slightly higher, the interaction could almost be better than a hardware D-pad.

I’m anticipating revisiting the project once watchOS 4/next generation Apple Watches are out, just to see if there are any opportunities to get a higher framerate. Maybe Apple will even announce Metal for watchOS at WWDC this summer!

——

This project was a ton of fun to work on, and a great reminder that even though unknowns are scary, you really don’t know what’s possible without trying. The end result was way less effort and code than I originally anticipated.

I’m still deciding if it’s worth investing more time into improving performance, but for the sake of getting some feedback, I’ve open sourced everything on GitHub. If you’re interested in contributing feel free to submit a PR. For any thoughts or feedback, let me know on Twitter at @_gabrieloc or through email.

Past Project

Building a Quadcopter Controller for iOS and Open-Sourcing the Internals

I’ve recently started getting into drones, and like so many others, it all started with cheap toy quadcopters.

For under $50, you can get ahold of a loud little flying piece of plastic from Amazon, and they’re a lot of fun. Some of them even come with cameras and Wi-Fi for control via a mobile app. Unfortunately, these apps are pretty low quality — they’re unreliable and frustrating to use, and look out of place in 2017. The more I used these apps, the more frustrated I got, so I started thinking about ways I could provide a better solution, and two months later I emerged with two things:

1. An iOS app for flying quadcopters called SCARAB, and

2. An open-source project for building RC apps called QuadKit

——

Continue reading this article on Medium, where it was syndicated to HackerNoon.

Past Project

SAQQARA

When I finally got around to picking up an Apple Watch in Fall 2016, one of the first things I noticed was a lack of real games.

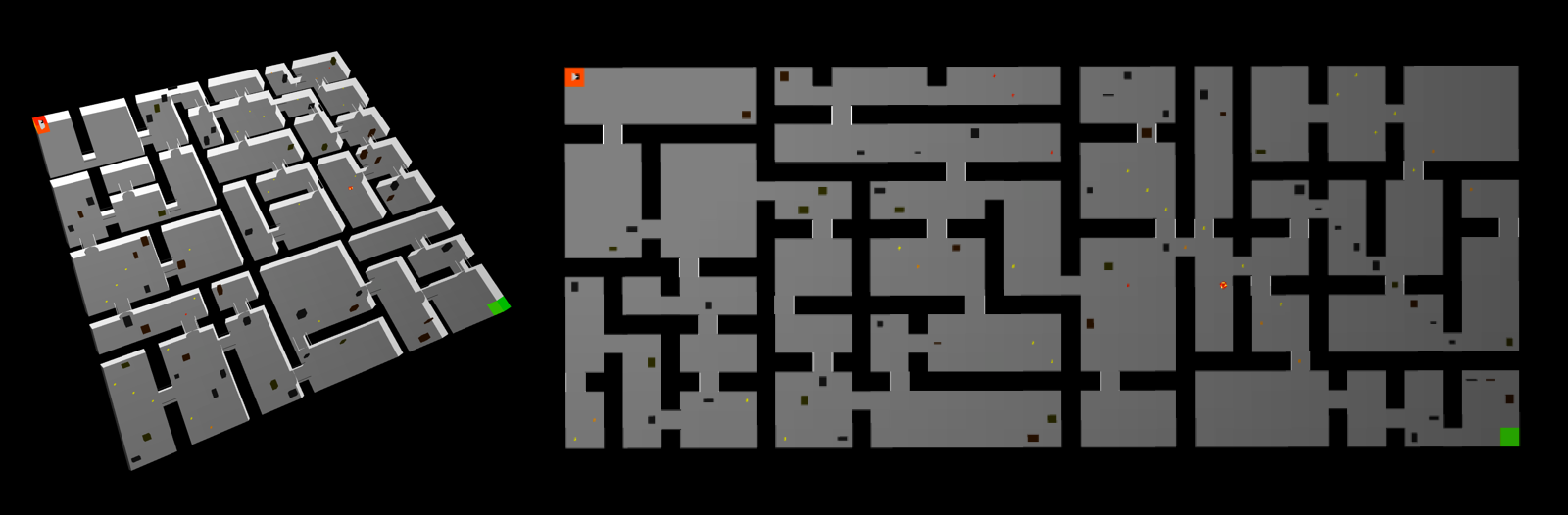

After hearing about Doom running on watchOS earlier in the year, and Apple bringing game technologies to watchOS 3, I spotted an opportunity. Inspired by classic dungeon crawler games, I began working on what would become SAQQARA, an homage to weird DOS games from the late 90s.

While the tech was fun to play around with at first, computational limitations of the platform became apparent early on, bringing performance front and center during development.

With Instruments.app totally unsupported for watchOS, I had to more or less improvise with stopwatch-logging methods wrapping heavy operations to identify slow algorithms or inappropriate data types. All in all, an excellent reminder of how easy it is to take for granted the (by comparison) ultra-powerful devices we keep in our pockets.
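The stopwatch-logging idea translates to almost any language. Here is a rough Python equivalent (the `stopwatch` helper and `timings` dictionary are made-up names, and the original was presumably written in Swift) that wraps a heavy operation, logs how long it took, and keeps the measurements for later comparison.

```python
import time
from contextlib import contextmanager

timings = {}  # label -> list of elapsed seconds, for comparing runs


@contextmanager
def stopwatch(label, log=print):
    """Time the wrapped block and log the result, even if it raises."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        timings.setdefault(label, []).append(elapsed)
        log(f"{label}: {elapsed * 1000:.2f} ms")


with stopwatch("build lookup table"):
    table = {n: n * n for n in range(100_000)}
```

Wrapping candidate hot spots one at a time like this is crude compared to a real profiler, but it is often enough to find the one operation that dominates.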

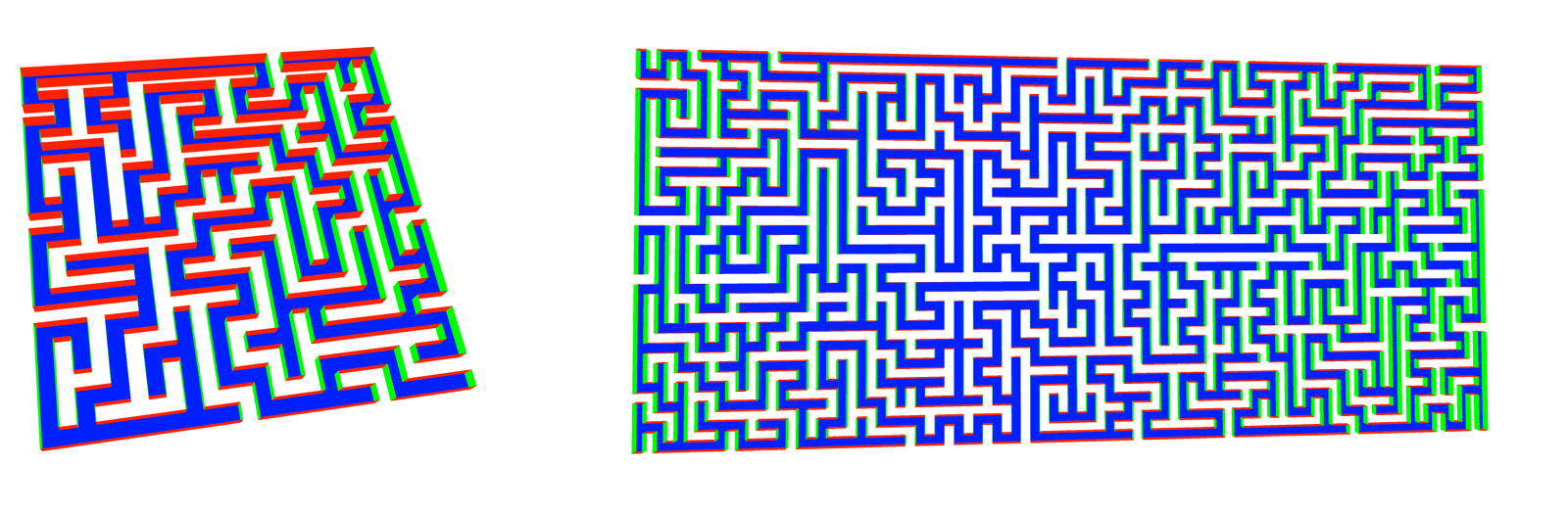

One of the bigger architectural decisions that had to be made early on was which maze-generation algorithm I would use. While prototyping gameplay early on, the first choice was depth-first search, which yielded unpredictable corridors which spanned the area of the map.
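As a reference point, a depth-first search maze generator (often called a recursive backtracker) can be sketched as follows. This is a generic Python version with an explicit stack, not the code from the game, and the function and variable names are illustrative.

```python
import random


def dfs_maze(width, height, seed=None):
    """Return a dict mapping each cell to the set of neighbours it opens onto."""
    rng = random.Random(seed)
    passages = {(x, y): set() for x in range(width) for y in range(height)}
    stack = [(0, 0)]
    visited = {(0, 0)}
    while stack:
        x, y = stack[-1]
        unvisited = [
            n for n in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1))
            if n in passages and n not in visited
        ]
        if not unvisited:
            stack.pop()  # dead end: backtrack to the previous cell
            continue
        nxt = rng.choice(unvisited)
        passages[(x, y)].add(nxt)  # carve a passage in both directions
        passages[nxt].add((x, y))
        visited.add(nxt)
        stack.append(nxt)
    return passages
```

Every cell ends up connected by exactly one path, which is why the output is all winding corridors with no open rooms.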

This technique was dropped not long after, as corridors and no rooms soon became tedious in-game, and generating a map with an area greater than 5x5 units would bring the process above the system’s High Water mark, crashing it shortly after launch.

The second option was something closer to recursive division, which was not only far faster, but resulted in a good mix of big and small rooms, separated by short corridors.
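A plain recursive division generator looks roughly like this in Python. It is a sketch of the textbook algorithm, not the variant shipped in SAQQARA, and `min_room` is a parameter introduced here to stop dividing early so that open rooms remain.

```python
import random


def recursive_division(width, height, min_room=2, seed=None):
    """Return a set of walls; each wall is a frozenset of the two cells it separates."""
    rng = random.Random(seed)
    walls = set()

    def divide(x, y, w, h):
        can_split_columns = w >= 2 * min_room
        can_split_rows = h >= 2 * min_room
        if not (can_split_columns or can_split_rows):
            return  # too small to split again: leave the area as a room
        if can_split_columns and can_split_rows:
            vertical = w > h or (w == h and rng.random() < 0.5)
        else:
            vertical = can_split_columns
        if vertical:
            cut = rng.randrange(x + min_room, x + w - min_room + 1)
            door = rng.randrange(y, y + h)  # one gap keeps both halves connected
            for row in range(y, y + h):
                if row != door:
                    walls.add(frozenset({(cut - 1, row), (cut, row)}))
            divide(x, y, cut - x, h)
            divide(cut, y, x + w - cut, h)
        else:
            cut = rng.randrange(y + min_room, y + h - min_room + 1)
            door = rng.randrange(x, x + w)
            for col in range(x, x + w):
                if col != door:
                    walls.add(frozenset({(col, cut - 1), (col, cut)}))
            divide(x, y, w, cut - y)
            divide(x, cut, w, y + h - cut)

    divide(0, 0, width, height)
    return walls
```

Because the algorithm starts from an open area and only adds walls, it does no backtracking and stores nothing but the walls themselves.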

For version 1.0, I was adamant on shipping essentials, and adding content iteratively in updates. After being rejected by Apple the first 4 times, SAQQARA went live right before the holiday break. As always, a great reminder to RTFM.

——

06/01/2016 Progress Update

Cleaning Game - Pathfinding

Towards Summer 2016, I began to realize that my Cleaning game was all style and no substance.

I had spent so much time perfecting animations and artwork, but completely ignored game mechanics, or anything that would make the experience entertaining gameplay-wise. As a result, I made the decision to completely reboot the project, dropping SceneKit in favour of something more prototype-friendly: Unity.

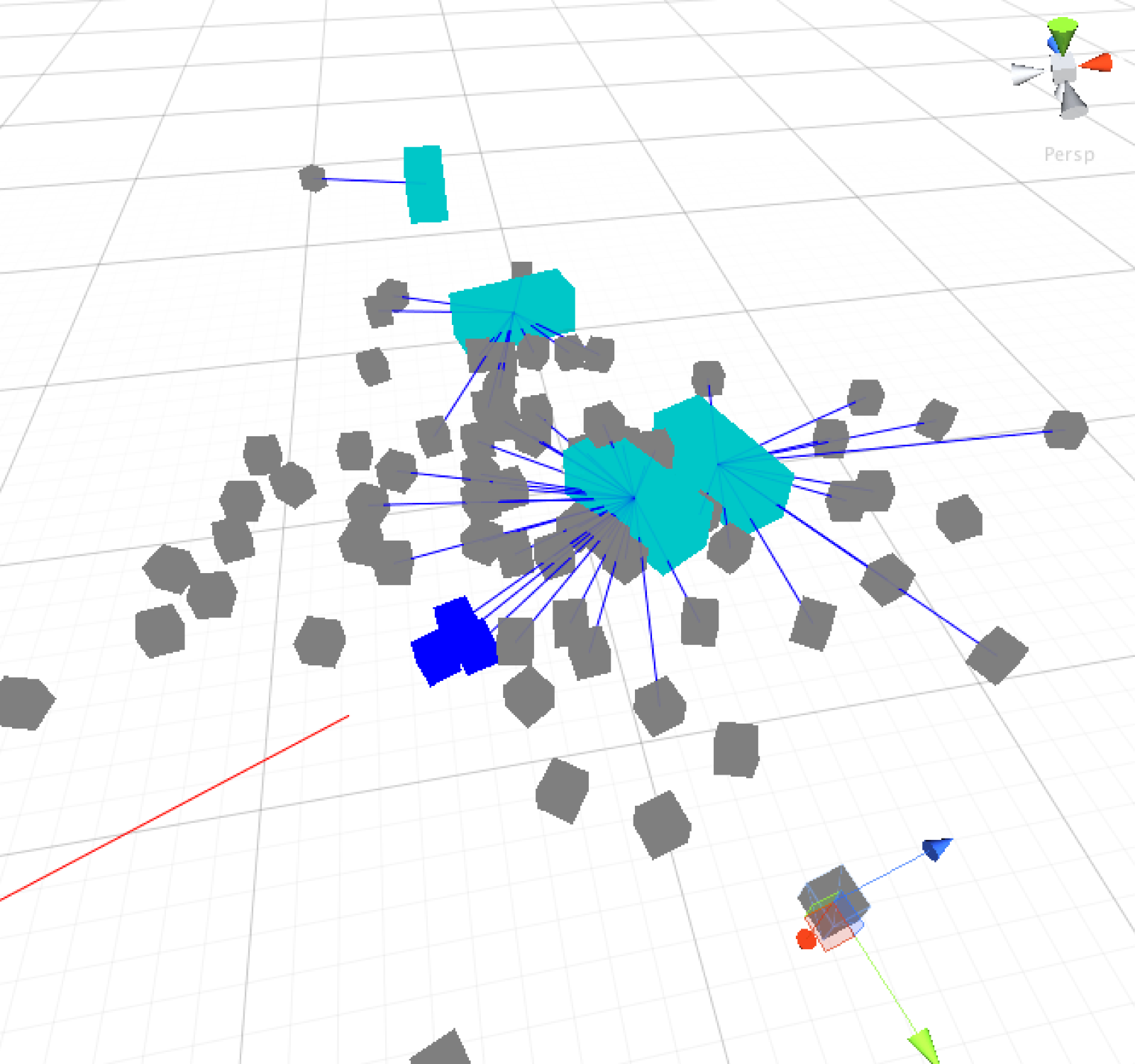

From an empty canvas, the original concept evolved quickly. The first change was to characters: Whereas originally you controlled a single bot, controlling a small swarm instead was more entertaining, and flexible as a mechanic. In an effort to not get distracted by aesthetics whatsoever, I stuck solely to primitive geometry for a while.

Only a week later, I had a prototype which demonstrated bots (represented by gray boxes below) expressing preference for “cleanable” objects (cyan boxes), and properly redistributing themselves according to workload. I strongly feel this exercise would have taken far more time with Apple’s immature game tools.

For all of June, I spent time building basic pathfinding so the bots wouldn’t bump into each other. What I thought would be a simple task ended up requiring far more complexity than I anticipated (i.e. A*), and towards the end, I finally decided to adopt Unity’s built-in NavMesh system instead.
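For a sense of what hand-rolled A* involves, here is a compact grid-based version in Python. It is a generic textbook sketch with a Manhattan-distance heuristic and 4-way movement, not the code from the prototype, and it ignores the harder part of the problem: several bots moving at once.

```python
import heapq


def a_star(grid, start, goal):
    """grid: list of strings where '#' is blocked. Returns a list of (row, col) or None."""

    def heuristic(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (estimated total, cost so far, cell)
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            path = [current]
            while current in came_from:  # walk back to the start
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < len(grid) and 0 <= nc < len(grid[0]) and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = current
                    heapq.heappush(
                        open_heap, (new_cost + heuristic((nr, nc)), new_cost, (nr, nc))
                    )
    return None
```

Even this small version needs a priority queue, cost bookkeeping and path reconstruction, which helps explain the appeal of switching to a built-in navigation system.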

Snapshots taken along the way helped document progress:

03/01/2016 Progress Update

Cleaning Game - Art

In March 2016, I decided to take a look into Apple’s game technologies, SpriteKit and SceneKit, by working on a game with a simple idea: Control a small robot to clean your house.

It was a great exercise in exploring new game mechanics and controls that would be native to mobile touchscreens, rather than porting existing paradigms from other platforms. The first iteration had you control a single robot which could be moved by dragging around on areas of the screen.

The character also had a small car that could be driven around, with the intention of using it to carry around heavy objects.

Past Project

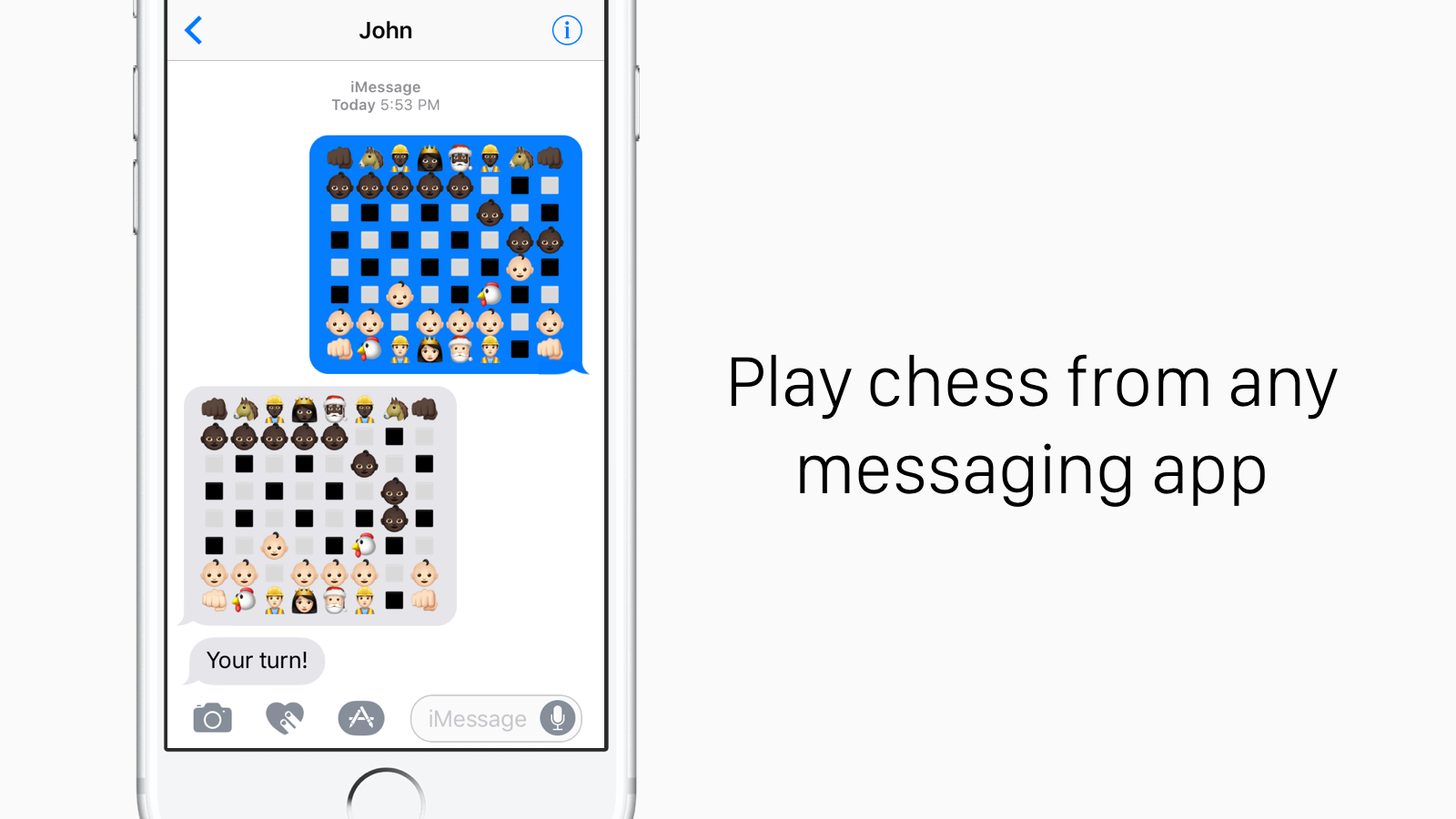

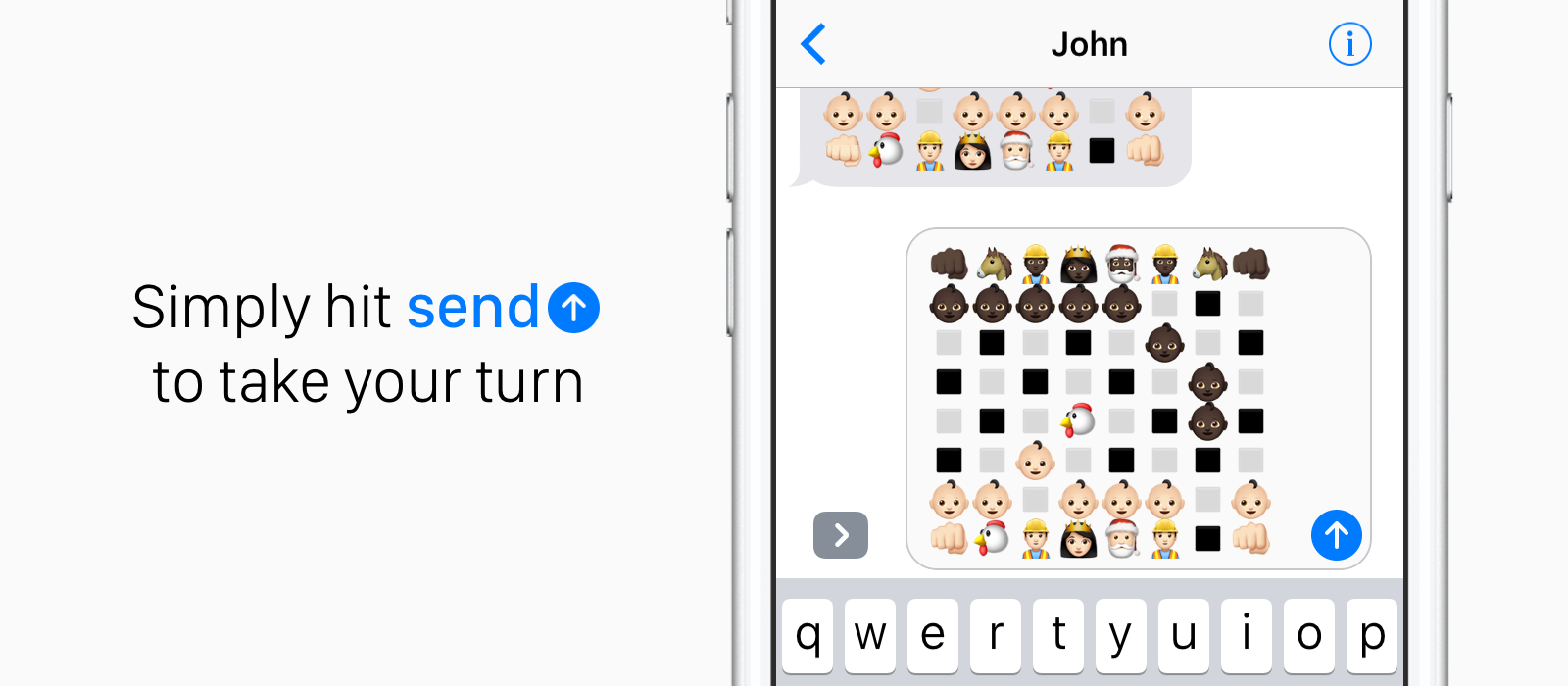

Emoji Chess Keyboard

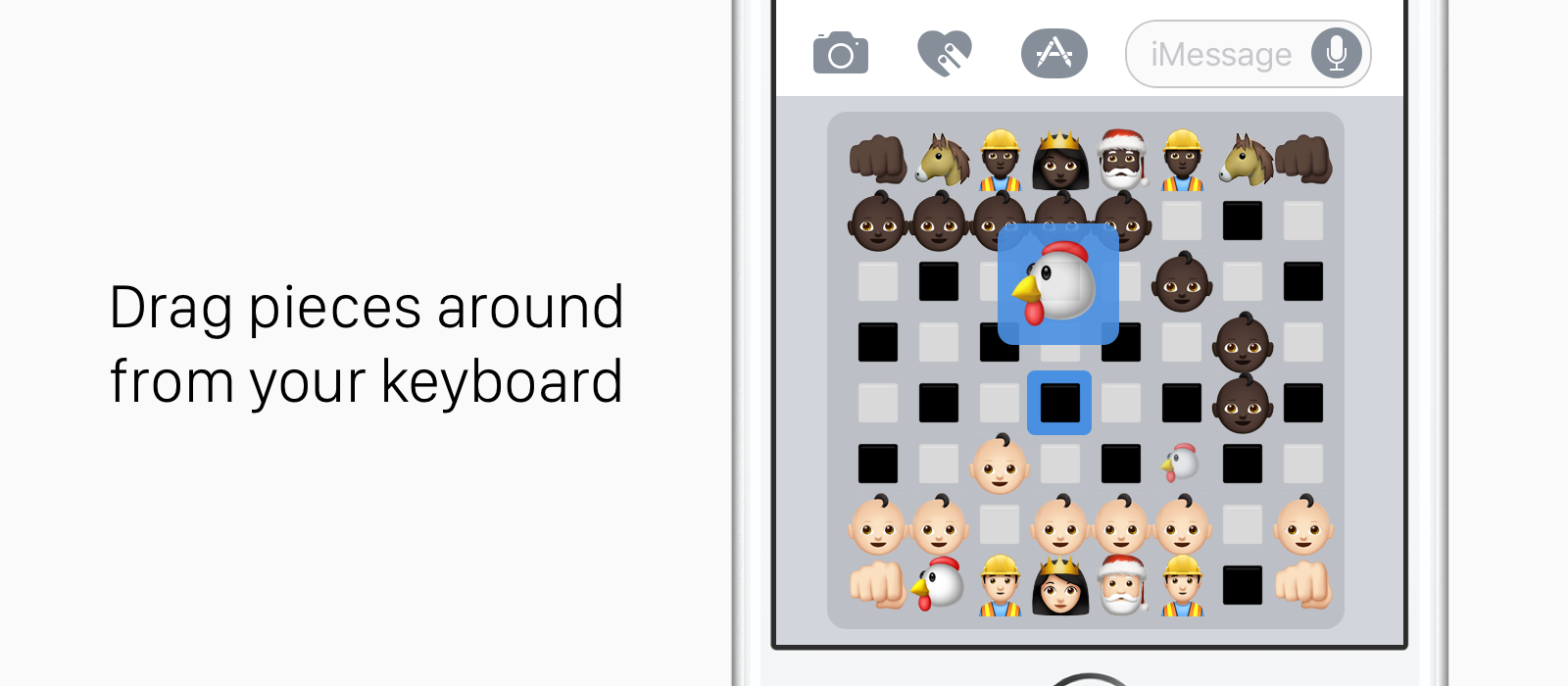

In late 2015, I noticed a set of tweets circulating of people playing chess using Emojis in iMessage.

After trying it out for myself, I became immediately exhausted by the process of removing and inserting emojis manually with the Emoji keyboard, and decided it would be less effort to make a tool to do the hard stuff for me. With iOS custom keyboards emerging around that time, I took a weekend off from ALEC to throw together Emoji Chess Keyboard.

Unsure of whether Apple would approve of the concept, I hastily submitted a working prototype, which actually ended up getting approved.

Shortly after, I submitted a second version which then cleaned up some of the rough edges and added polish. Unfortunately, Apple decided they no longer liked the concept, and rejected every re-submission and appeal request I made. I’ve accepted that 1.1 will never see the light of day.

——

Past Project

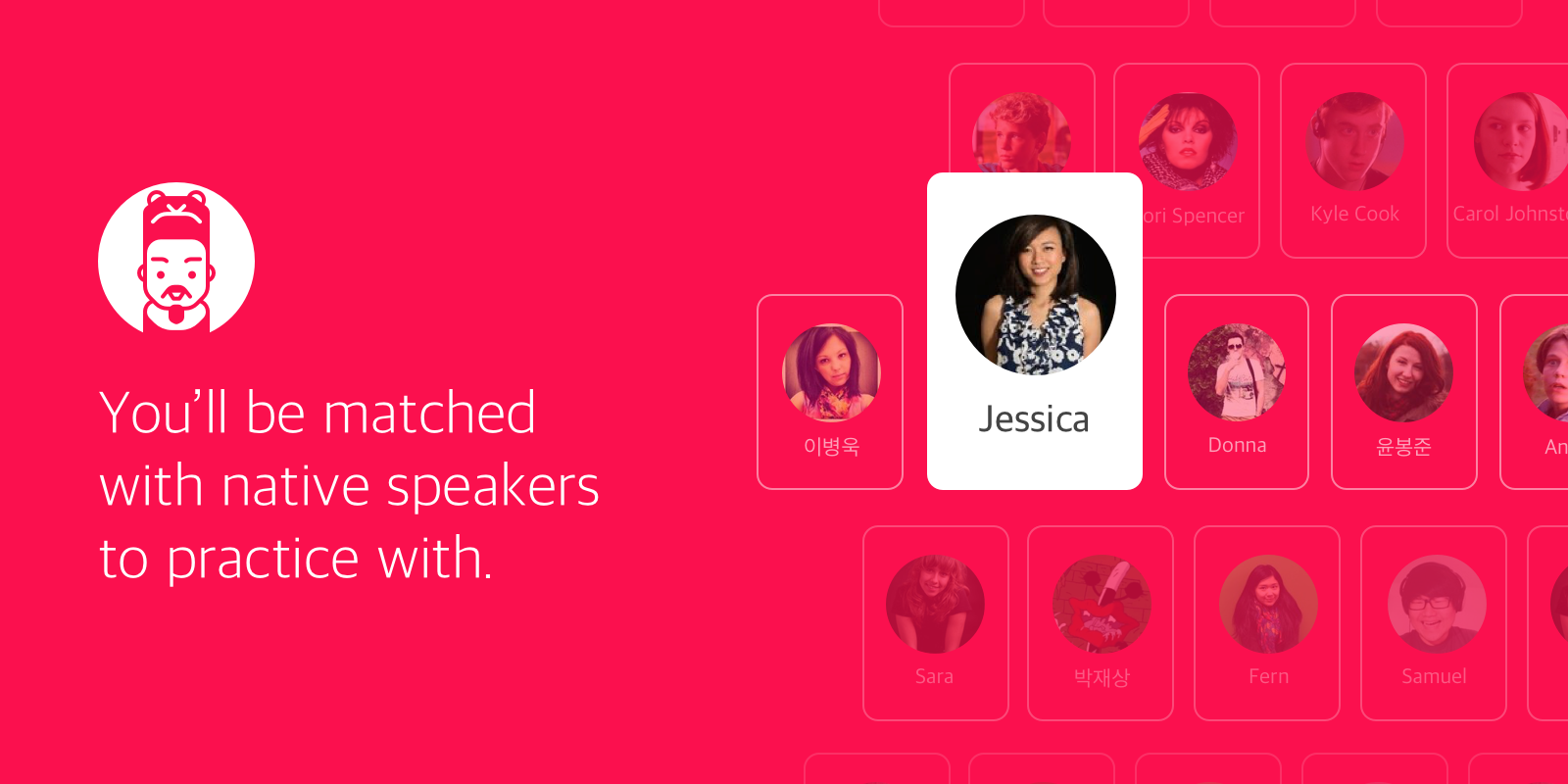

ALEC Korean-English Chat

Towards the end of 2014, I was preparing for a long trip to South Korea.

I built ALEC as a way to prepare for my trip by improving my speaking ability, as well as to get my feet wet with some of the new iOS 8 technologies which were being rolled out around the time.

I ended up learning so much that I even wrote a short article on Medium, describing my goals, process, and challenges of the project. It’s about an 8 minute article, and I recommend checking it out to get a sense of what it’s like building something while living in another country: Making ALEC, a Language Exchange Service Built While Travelling.

——

Past Project

MISSI0N

At one point in 2013, I decided I was fed up with sending the same messages to the same people when we’d hang out — “Where are you now”, “Are you close?”, “I’ll be late”, etc.

Our phones all had ways of communicating that information passively anyways, so I figured there might be an opportunity to have an app do the work. The goal was always to make something that was so frictionless to use, that there’d be a greater benefit to using it vs. just sending messages.

I defined two categories of users — “organizers” and “joiners”, and distilled the workflow for organizers down into three easy steps:

Once an organizer would create an event, invited members would all receive push notifications, which would prompt them to either accept or reject the invitation. Upon accepting the invitation, they’d see themselves and other joiners in the app immediately. Not much more to it than that.

Another aspect to events was that they would expire after an hour, so there’d be a clear definition of when your location would be tracked, and when it wouldn’t be.

While everything up until now sounds great, there were serious flaws in the process.

One of them was the fact that locations weren’t really tracked passively, since background refresh didn’t exist at the time, and it was only possible to update locations when the app was in the foreground. This would become an unfortunate restriction that would detract from the app’s overall utility.

In retrospect, one thing I would have done differently would be to design the network stack completely differently. Certain aspects of Parse were great, but because it relies on a REST-style API, realtime location updates were slow and inaccurate, relying on refreshing after a fixed interval, and only when the app was in the foreground. I suspect a socket-based connection would have resulted in a better user experience.

Almost immediately after launch, iMessage rolled out the ability to send your location, which despite my three simple steps, made it impossible to compete with. While I learned a lot from making Missi0n, resurrecting it would require carefully rethinking its goals and objectives.

——

Past Project

Wobblr

Wobblr was the first big iOS project I worked on back in 2011, when skeuomorphic materials and missing vowels/a trailing “r” were essentially required to get onto the App Store.

It wrapped a long lost open-source synthesizer library, which was highly configured to produce a certain sound. By only exposing a few basic parameters to the user, it was effectively impossible to produce something which didn’t sound good.

In mid-2016, Apple yanked it from the App Store since it hadn’t been updated since iOS 6. I’d love to get it back up, but the source code is long gone on an old computer!